Get Started

Create a Kafka Cluster

If you do not have a Kafka cluster and/or topic already, follow these

steps to create one.

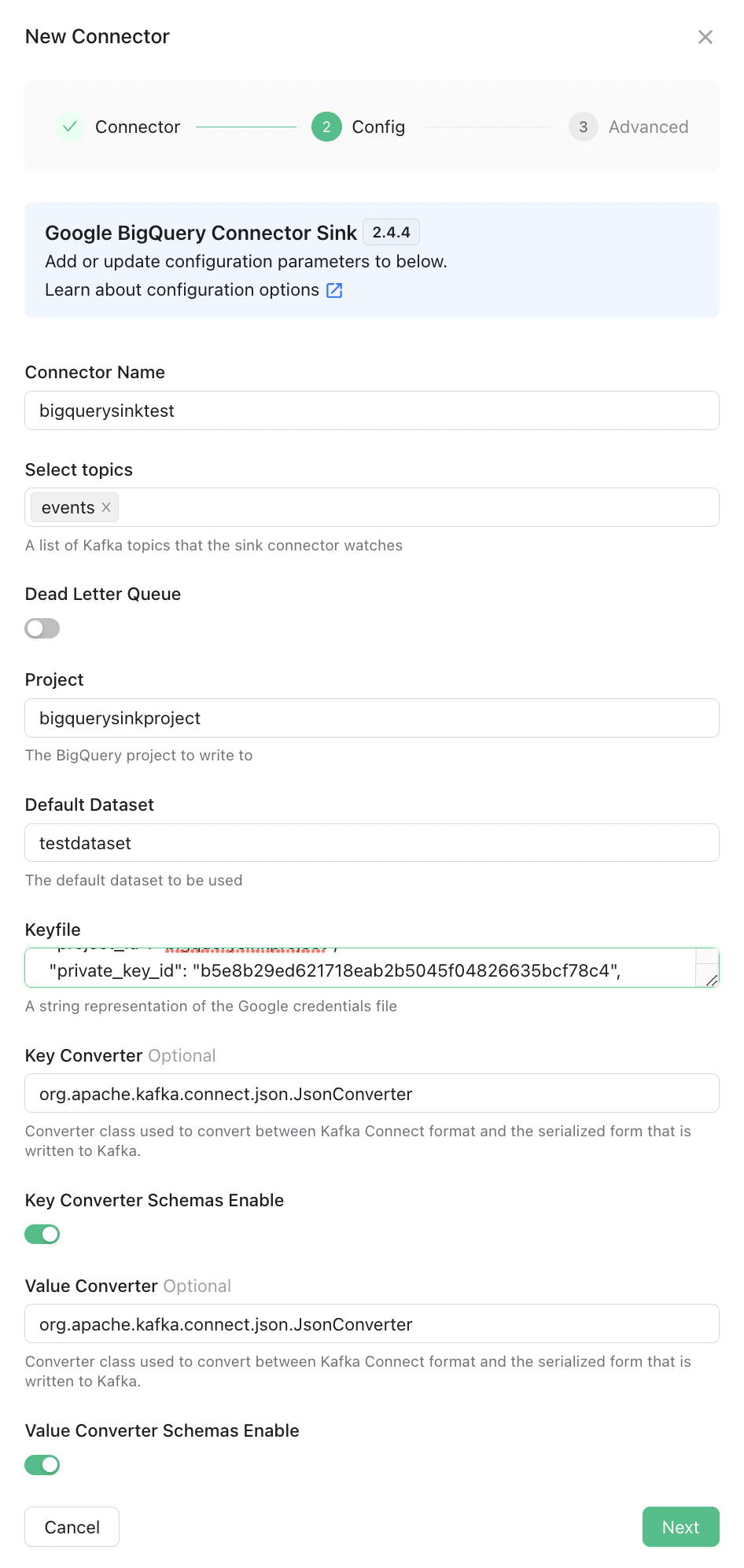

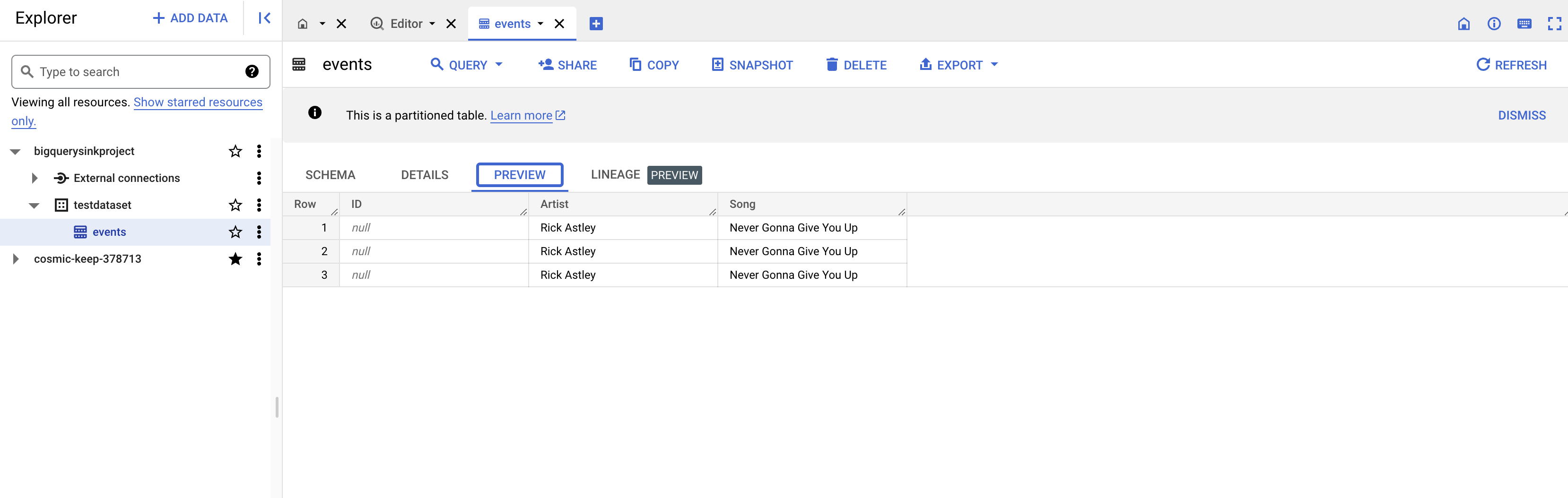

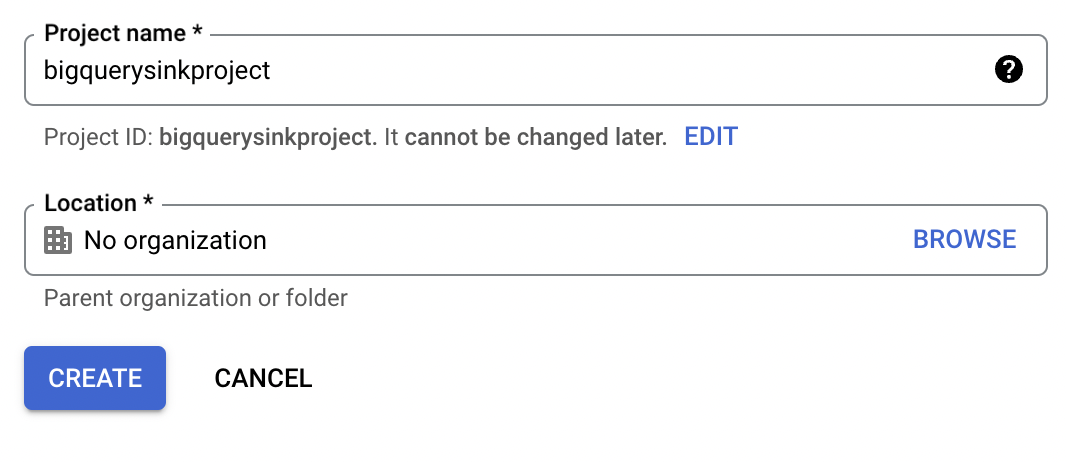

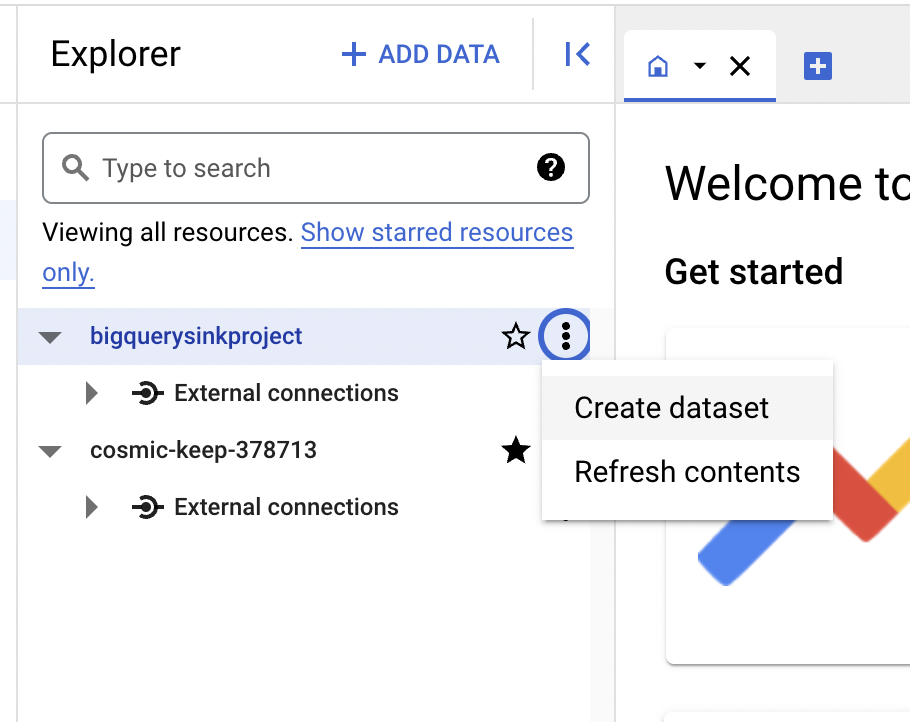

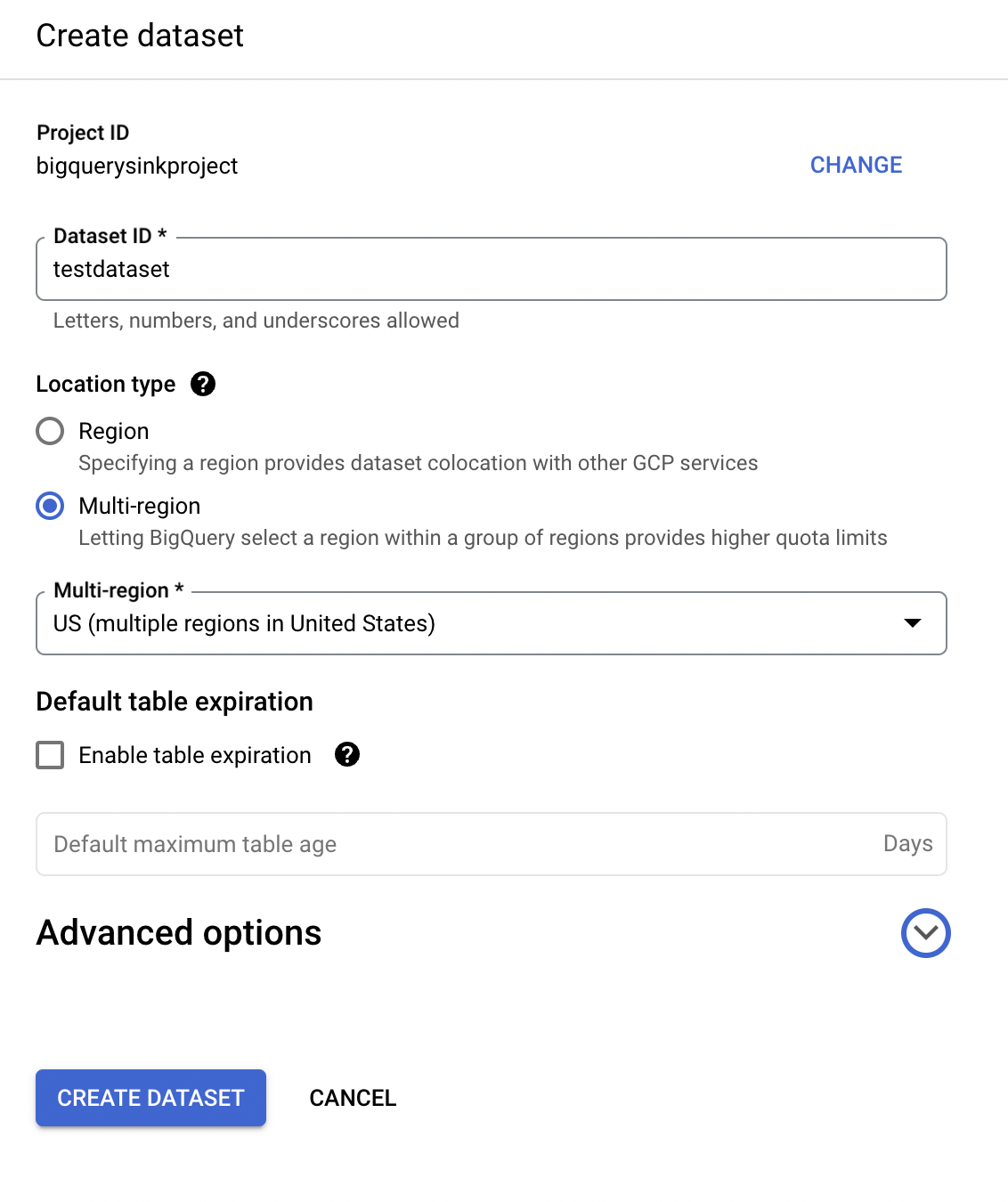

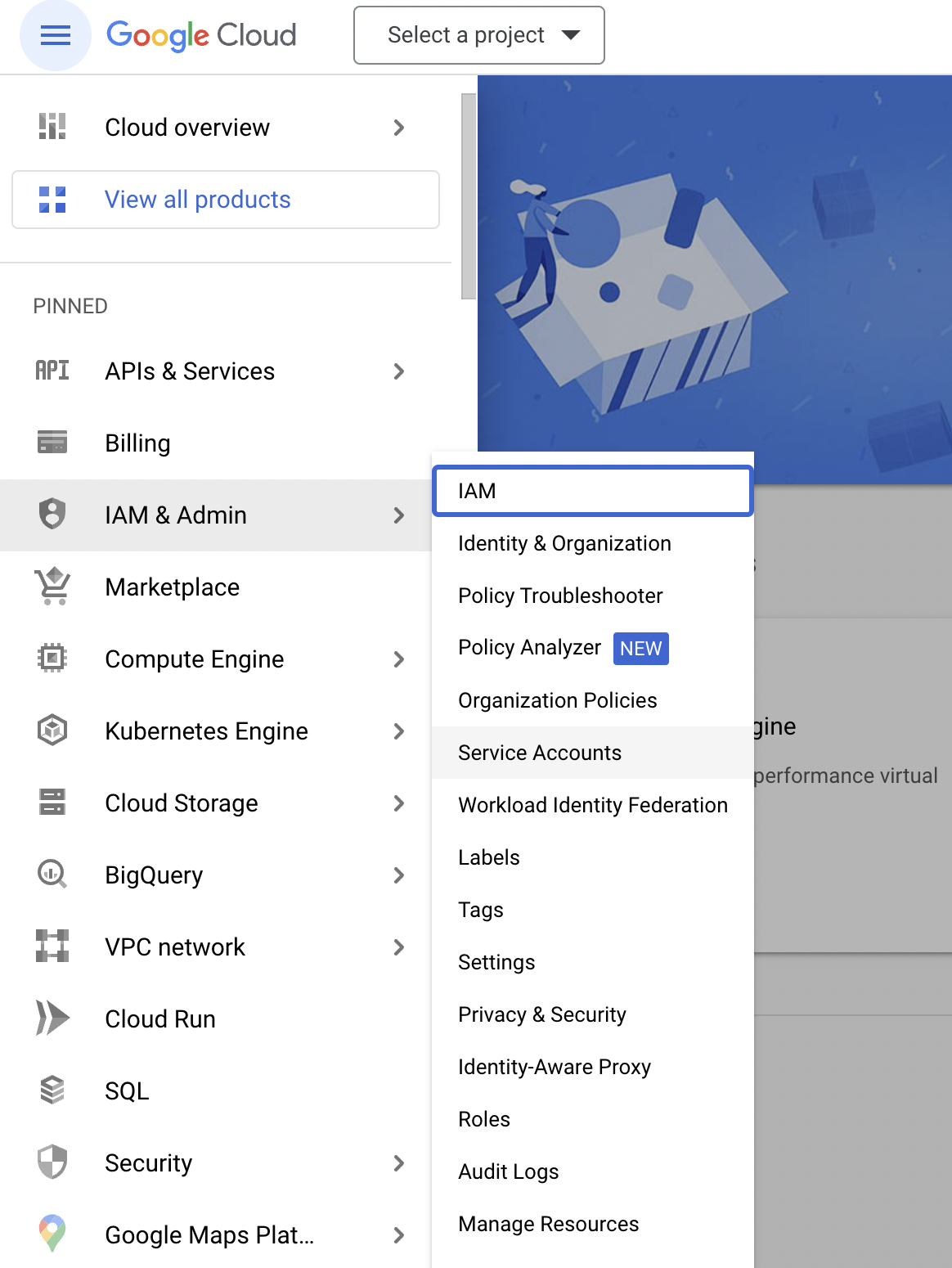

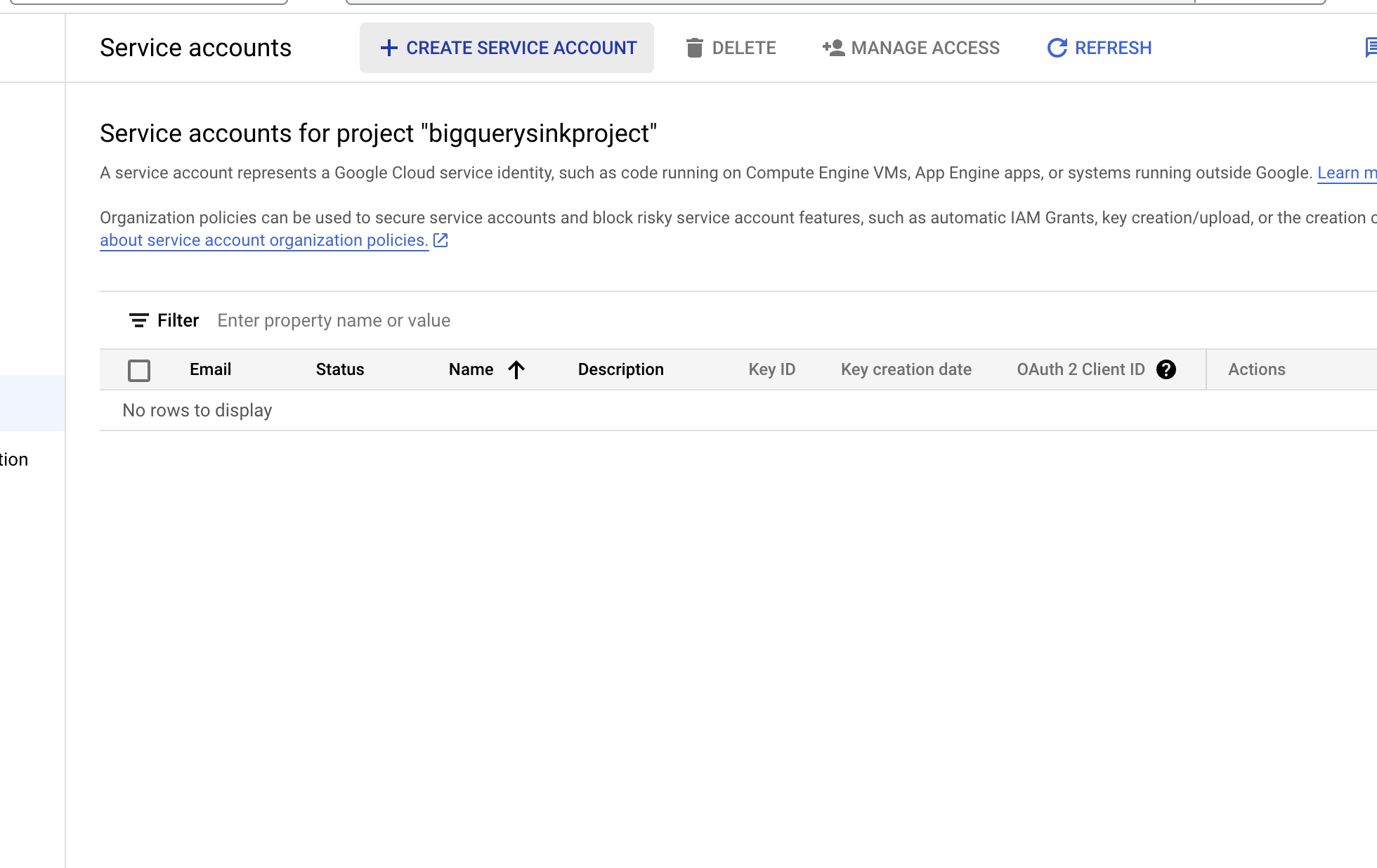

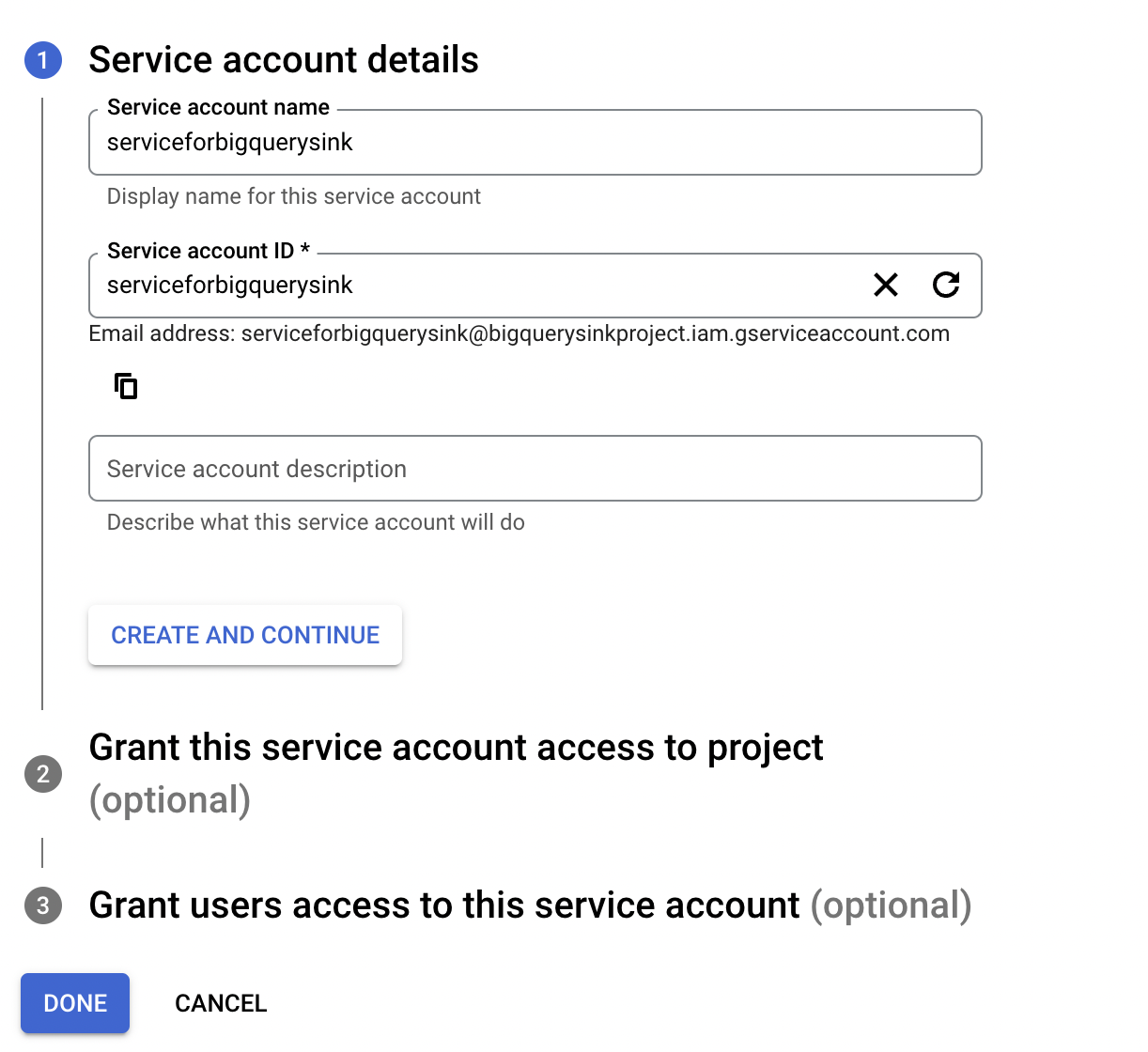

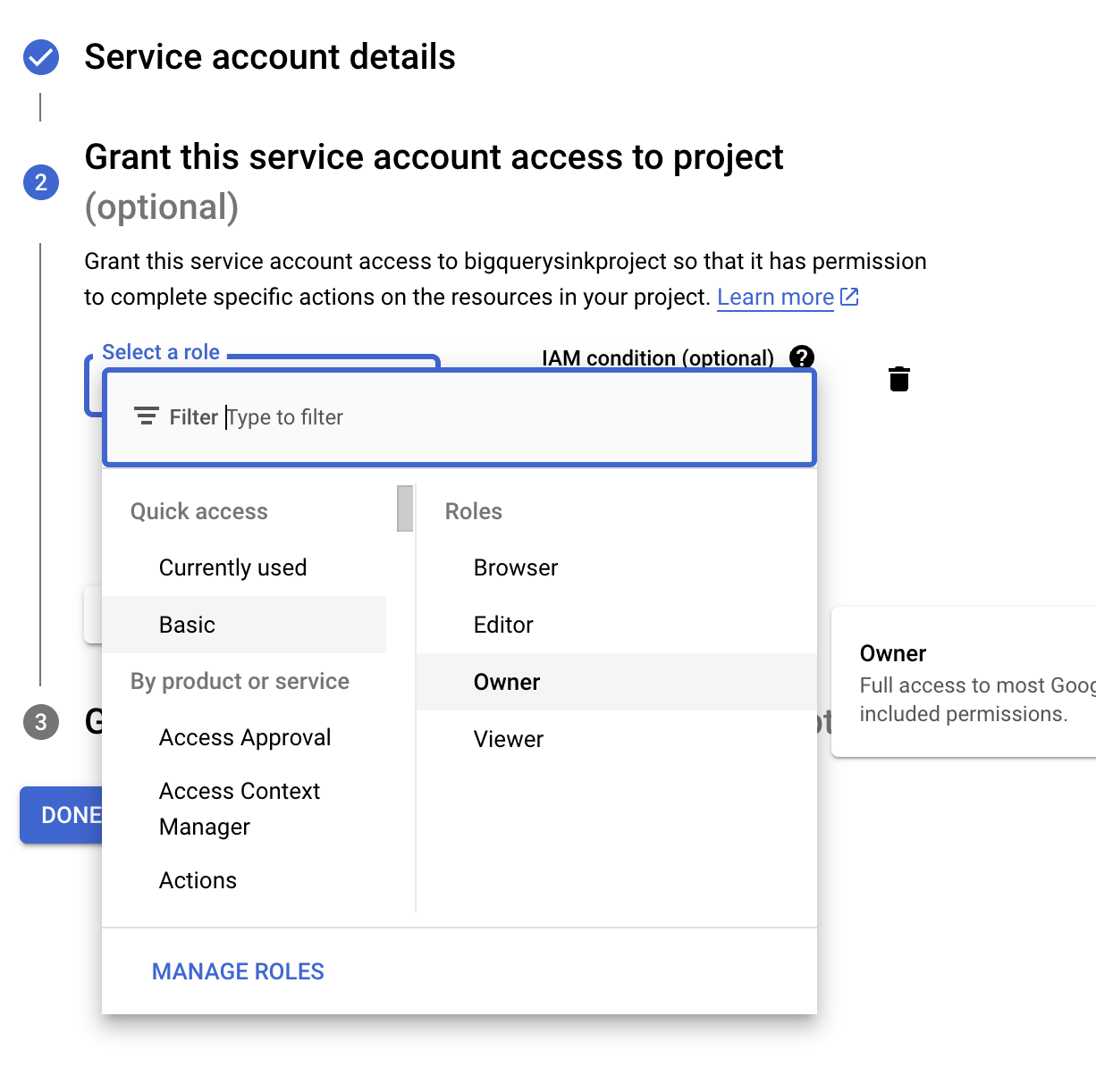

Prepare the Google BigQuery Environment

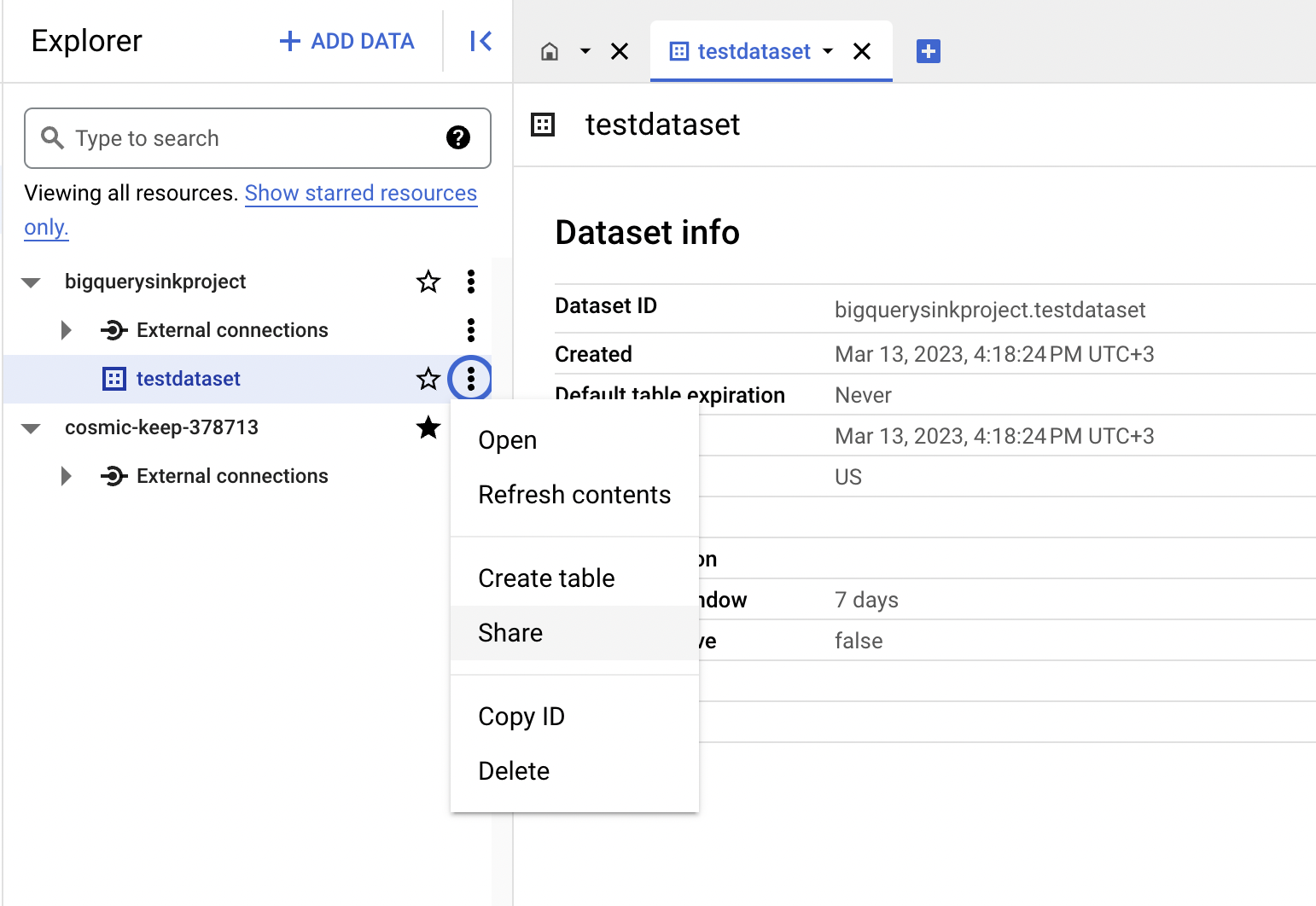

If you already have a Google BigQuery environment with the following information, skip this step and continue from the Create The Connector section.- project name

- a data set

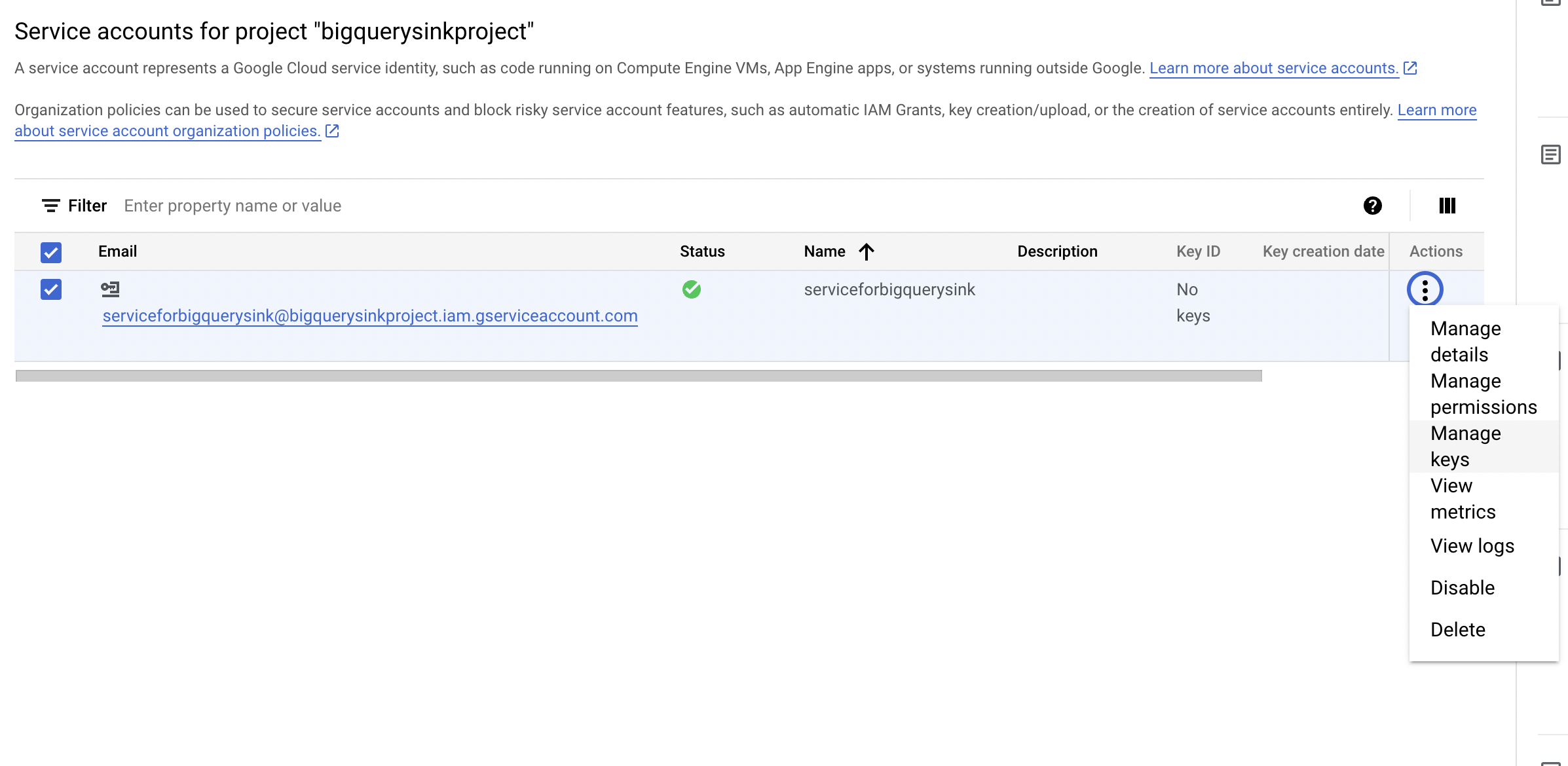

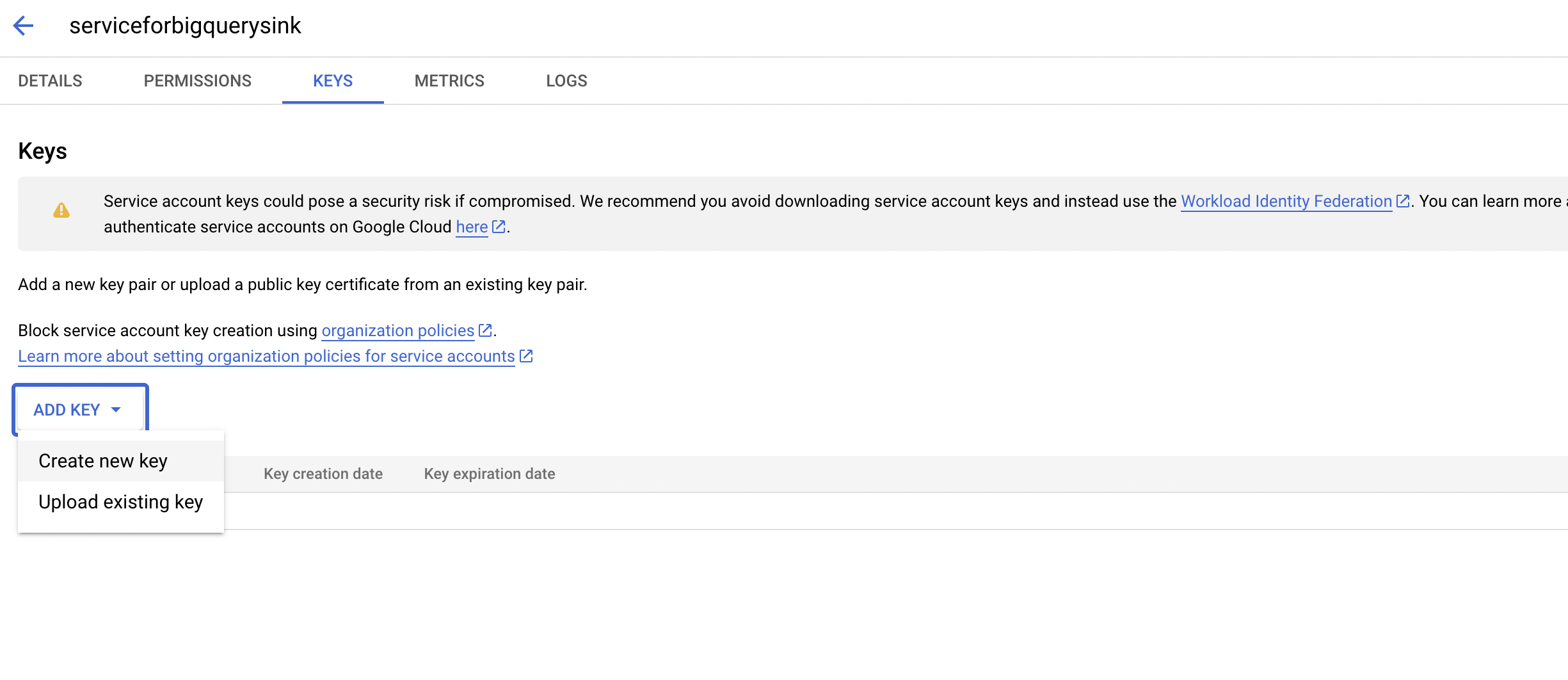

- an associated google service account with permission to modify the google big query dataset.

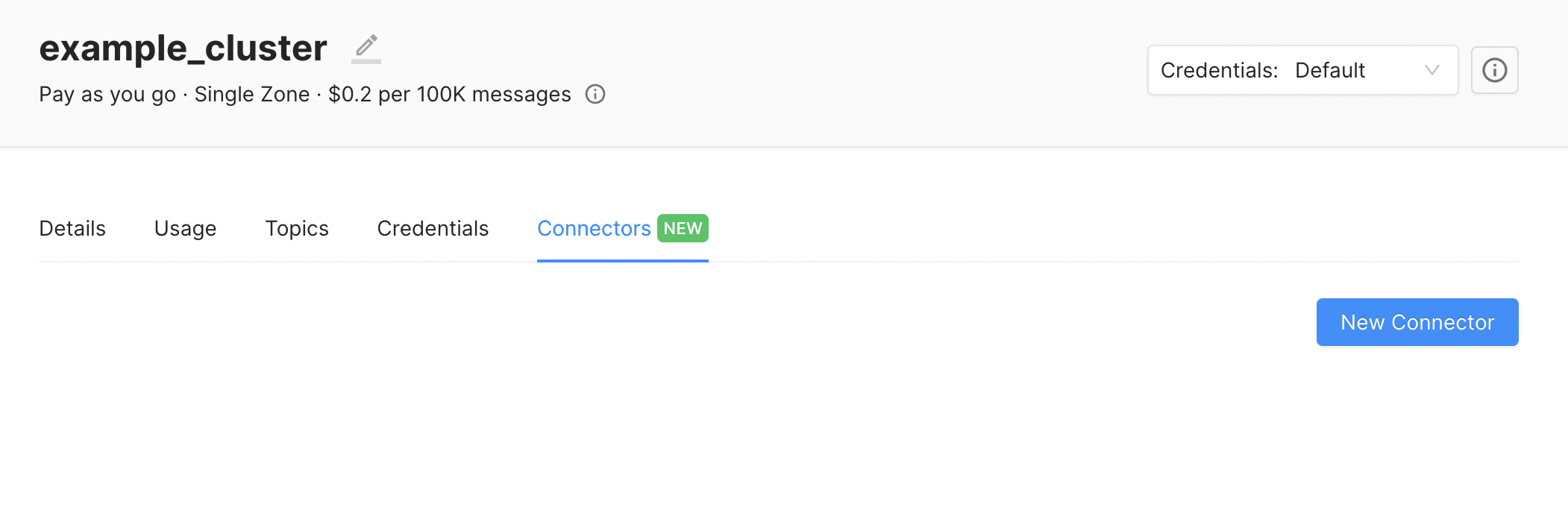

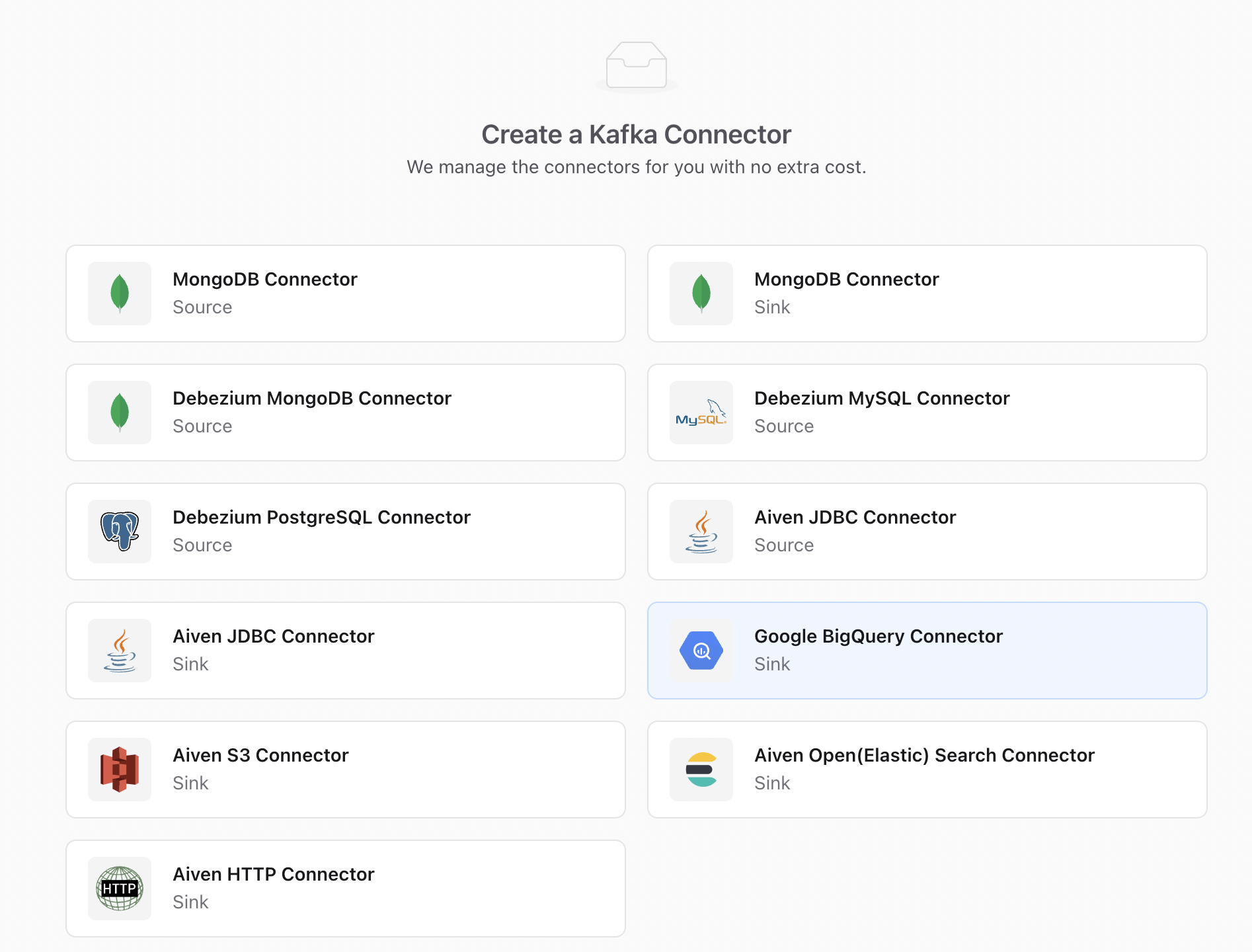

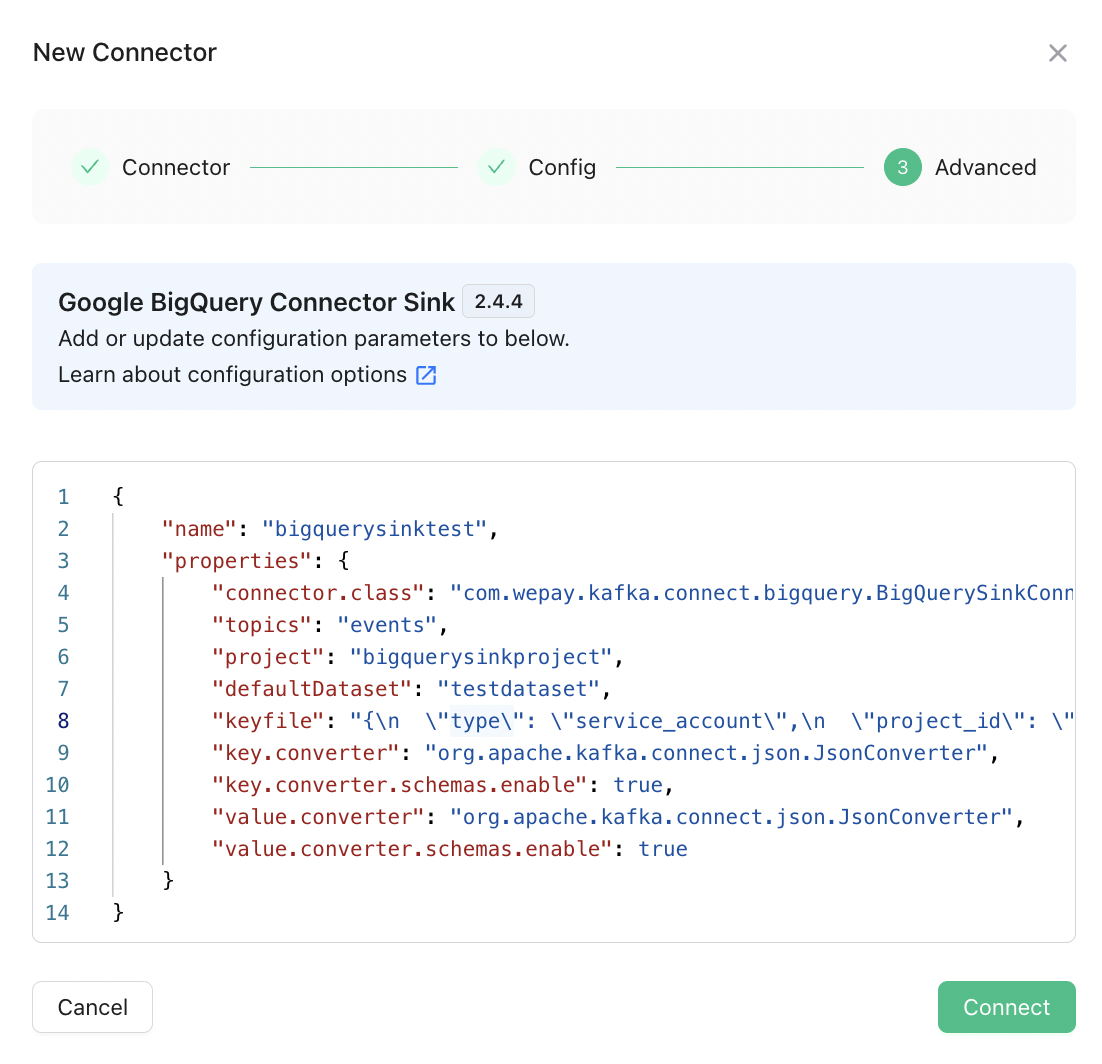

Create the Connector

Go to the Connectors tab, and create your first connector by clicking the New Connector button.